Let’s face it: we love exciting announcements. Why talk about the small technical improvements of a given artificial intelligence (AI) system when you can prognosticate about the coming advent of artificial general intelligence (AGI)? However, focusing too much on AGI risks missing the many incremental improvements along the way, much as focusing solely on when cars will literally drive themselves risks missing the assisted-driving features being added to cars all the time.

DeepMind at the forefront

The coverage of AlphaGo, DeepMind’s system that defeated professional Go player Lee Sedol, was a game changer. Now there is AlphaZero, AlphaFold, and more. DeepMind has made incredible progress in showing how AI can be applied to real problems. AlphaFold, for example, predicts how proteins will fold, and knowing a protein’s shape accurately unlocks enormous potential in how we think about certain medical treatments.

The mRNA Covid-19 vaccines were based largely on targeting the shape of one specific protein, the ‘spike protein’. More broadly, the protein-folding problem had occupied researchers for more than 50 years1.

More recently, DeepMind presented a new ‘generalist’ AI model called Gato. Think of it this way: AlphaGo focuses on the game of Go, and AlphaFold focuses on protein folding. These are not generalist AI applications, as each is built for a single task. Gato, by contrast, can2:

- Play Atari video games

- Caption images

- Chat

- Stack blocks with a real robot arm

In total, Gato can perform 604 tasks. This is very different from more specialised AI applications, which are trained on specific data to optimise for only one thing.

So, is AGI now on the horizon?

To be clear, full AGI is a significant leap beyond anything achieved to date. It’s possible that, with greater scale, the approach used by Gato could lead to something closer to AGI than anything built so far; it’s also possible that increasing scale leads nowhere. AGI may require breakthroughs that have not yet been made.

People love to get ‘hyped’ about AI and its potential. In recent years, OpenAI’s GPT-3 language model was a major development, as was its image generator DALL-E. Both were huge achievements, but neither has led to technology that exhibits human-level understanding, and it is unknown whether the approaches used in either could lead to AGI in the future.

If we cannot say when AGI will come, what can we say?

While massive breakthroughs like AGI may be difficult, if not impossible, to predict with certainty, interest in AI broadly has been on an incredible upswing. The recently published Stanford AI Index report is extremely useful here, as it shows:

- The magnitude of the investment pouring into the space. Investment, in a sense, partly measures ‘confidence’, in that there has to be a reasonable belief that productive activity could result from these efforts.

- The breadth of AI activities, and how performance metrics are improving across them.

The growth of AI investment

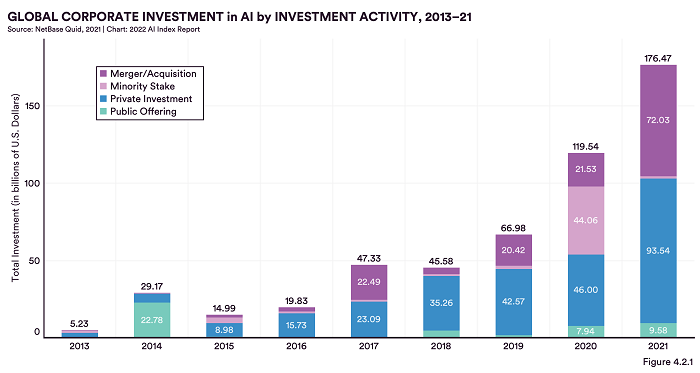

Looking at Figure 1, the growth in investment has been staggering. While this is partly driven by the excitement and potential of AI itself, it is also driven by the general environment. The large figures for 2020 and 2021 may partly reflect the fact that the cost of capital was minimal and money was chasing exciting stories with potential profits. Based on what we know today, it is difficult to predict whether the 2022 figure will exceed 2021’s.

It’s also interesting to consider the evolution of the components of investment:

- 2014 was defined by ‘public offering’ activity, which in other years was generally small relative to the totals.

- Private investment was the primary driver of consistent growth, so Figure 1 appears to depict a cyclical upswing in private investment, which we recognise may not continue in a straight line throughout the 2020s.

Figure 1: Global corporate investment in AI by investment activity, 2013–21

Source: Daniel Zhang, Nestor Maslej, Erik Brynjolfsson, John Etchemendy, Terah Lyons, James Manyika, Helen Ngo, Juan Carlos Niebles, Michael Sellitto, Ellie Sakhaee, Yoav Shoham, Jack Clark, and Raymond Perrault, “The AI Index 2022 Annual Report,” AI Index Steering Committee, Stanford Institute for Human-Centered AI, Stanford University, March 2022.

Historical performance is not an indication of future results and any investments may go down in value.

What activities is the money funding?

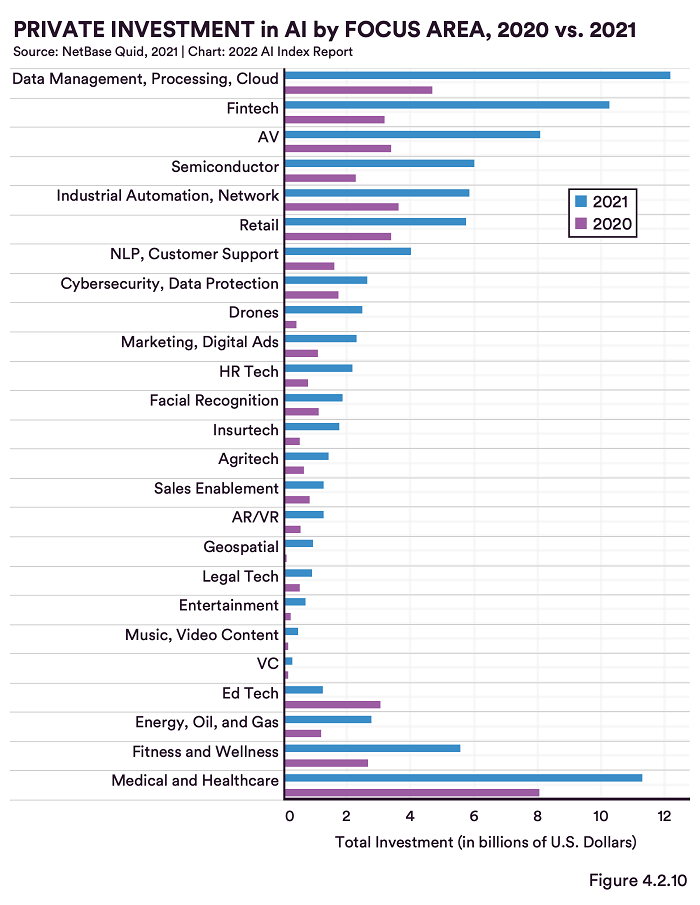

Aggregate investment amounts are one thing, but it’s more concrete to consider specific areas of activity. Figure 2 helps in that regard, showing how investment changed in 2021 relative to 2020.

- In 2021, ‘Data Management, Processing, Cloud’, ‘Fintech’ and ‘Medical and Healthcare’ led the way, each breaking $10 billion.

- Notably, in the 2020 (purple) data, ‘Medical and Healthcare’ led with around $8 billion. Since ‘Data Management, Processing, Cloud’ and ‘Fintech’ were therefore below that level in 2020 yet above $10 billion in 2021, their year-over-year increases stand out in starker relief.

Figure 2: Private investment in AI by focus area, 2020 vs. 2021

Source: Daniel Zhang, Nestor Maslej, Erik Brynjolfsson, John Etchemendy, Terah Lyons, James Manyika, Helen Ngo, Juan Carlos Niebles, Michael Sellitto, Ellie Sakhaee, Yoav Shoham, Jack Clark, and Raymond Perrault, “The AI Index 2022 Annual Report,” AI Index Steering Committee, Stanford Institute for Human-Centered AI, Stanford University, March 2022.

Historical performance is not an indication of future results and any investments may go down in value.

Is AI technically improving?

This is a fascinating question, one deep enough to fill countless academic papers to come. What we can note here is that progress involves two distinct efforts:

- Designing, programming or otherwise creating the specific AI implementation.

- Figuring out the best ways to test whether it is actually doing what it’s supposed to, or improving over time.

I find ‘semantic segmentation’ particularly interesting. It sounds like something only an academic would ever say, but the idea is simple: take a picture of a person riding a bike. You want the AI to know which pixels belong to the person and which belong to the bike.

If you are thinking, ‘who cares whether sophisticated AI can tell the person from the bike in such an image?’, I grant you that it may not be the highest-value application. However, picture an internal organ in a medical image, and think about the value of segmenting healthy tissue from a tumour or a lesion. Can you see the value that could bring?
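To make the idea concrete, here is a minimal sketch under clearly stated assumptions: a hypothetical 4x6 ‘image’ in which every pixel already carries a class label (0 for background, 1 for person, 2 for bike), and a hypothetical model output that gets a single bike pixel wrong. It grades the prediction with intersection over union (IoU), a common way to score segmentation: the pixels that the prediction and the ground truth both assign to a class, divided by all pixels that either one assigns to it. Real systems learn these labels from data; this only illustrates what the output looks like and how it can be measured.

```python
# Toy semantic segmentation: one class label per pixel (hypothetical 4x6 image).
BACKGROUND, PERSON, BIKE = 0, 1, 2

# Hypothetical ground truth: which pixels are the person and which are the bike.
truth = [
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 2, 2, 2, 2, 0],
    [0, 2, 0, 0, 2, 0],
]

# Hypothetical model prediction: identical except one bike pixel labelled as person.
pred = [row[:] for row in truth]
pred[2][1] = PERSON


def iou(pred, truth, cls):
    """Intersection over union for one class: shared pixels / pixels in either mask."""
    inter = union = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            in_p, in_t = p == cls, t == cls
            inter += in_p and in_t  # bool counts as 0 or 1
            union += in_p or in_t
    return inter / union if union else float("nan")


scores = {name: iou(pred, truth, cls) for name, cls in [("person", PERSON), ("bike", BIKE)]}
print(scores)  # person 0.8, bike about 0.833
```

Swapping the hypothetical class ids for ‘healthy tissue’ and ‘tumour’ would grade a medical segmentation in exactly the same way; the scoring does not care what the labels mean.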

The Stanford AI Index report breaks down specific tests designed to measure how AI models are progressing in such areas as:

- Computer Vision

- Language

- Speech

- Recommendations

- Reinforcement Learning

- Hardware Training Times

- Robotics

In many of these areas, AI systems are approaching what could be defined as the ‘human standard’, but it’s important to note that most of these systems specialise only in the one task for which they were designed.

Conclusion: It’s still early for AI

With certain megatrends, it’s important to have the humility to recognise that we don’t know with certainty what will happen next. With AI, while we can anticipate certain innovations, be it in vision, autonomous vehicles or drones, we must also recognise that the biggest returns may come from activities we aren’t tracking yet.

Stay tuned for ‘Part 2’ where we discuss recent results on certain companies operating in the space.

Sources

1 Source: https://www.cdc.gov/coronavirus/2019-ncov/vaccines/different-vaccines/mrna.html#:~:text=The%20Pfizer%2DBioNTech%20and%20Moderna,use%20in%20the%20United%20States

2 Source: Heikkila, Melissa. “The hype around DeepMind’s new AI models misses what’s actually cool about it.” MIT Technology Review. 23 May 2022.